Maximizing Product Success: 9 Essential Tips for Designers

Understanding Feature Impact

Adding new features often complicates a product and makes onboarding harder. Users may need extra guidance and support before they appreciate the value of an enhancement, and product managers have to invest time in showcasing it. New functionality is still crucial, so it’s essential to determine whether each update justifies the resources spent on it. How can we gauge success? Collaborating with the analytics team is vital, but having a foundational understanding of the metrics involved is equally important. Here are some advanced strategies that have proven beneficial.

Section 1.1 User Segmentation and Statistical Significance

When measuring the impact of a new feature, check both statistical significance and how individual user segments respond. Depending on the product’s traffic, an A/B test may collect enough data sooner than expected; on day one, early data might already suggest whether the feature is succeeding. However, early data over-represents the users who visit most often, so it’s essential to analyze how different user groups interact with the feature. For example, if older users open the app only every few days, their stickiness (DAU/MAU) might be around 30%, which means most of them will not have seen the feature on day one. To draw meaningful conclusions, it may be necessary to wait a week so that every segment is represented in the data.
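As a minimal sketch of checking significance per segment, the snippet below runs a two-sided two-proportion z-test for each segment separately. The segment names and counts are made up for illustration, and the z-test is only one common choice; your analytics team may prefer a different method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical data: (conversions, users) for control and variant.
segments = {
    "new_users": ((120, 1000), (165, 1000)),
    "returning_users": ((40, 200), (44, 200)),
}

results = {}
for name, ((conv_a, n_a), (conv_b, n_b)) in segments.items():
    results[name] = two_proportion_p_value(conv_a, n_a, conv_b, n_b)
    print(f"{name}: p = {results[name]:.4f}")
```

With these illustrative numbers, the larger segment falls below the conventional 0.05 threshold while the smaller one does not, which is the kind of situation where waiting for more data in the slower segment makes sense.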

Section 1.2 Consideration of Seasonal Trends

When evaluating feature performance, be wary of seasonal influences. Comparing user engagement during pay periods (like the beginning of the month) with times when users typically spend their money (such as the end of the month) can lead to misleading conclusions, because the difference may reflect the calendar rather than the feature.

Section 1.3 Testing Hypotheses through Advertisements

Testing ideas via ads is often quicker and more economical than implementing them directly into the product. By showcasing different features in advertisements, you can gauge user interest before committing development resources. If users respond positively to a specific feature in an ad, consider following up with a message that acknowledges their interest and perhaps offers a small incentive, such as a promo code. This can also be an opportunity to gather contact information for further customer development.

Section 1.4 Identifying Key Features

When multiple features are released simultaneously, it can be challenging to pinpoint which ones drive metric changes. To address this, I conduct controlled tests by isolating each feature and monitoring key metrics. Ideally, features should be tested sequentially during initial rollouts to accurately measure their individual impacts.

Section 1.5 Multi-Channel Considerations

While optimizing for specific metrics, such as conversion rates, it’s crucial not to overlook how these optimizations can impact other areas. For instance, reducing the number of questions in a signup form may boost conversion rates but could also decrease the quality of leads. Balancing marketing and sales metrics is essential to ensure that both departments can thrive, ultimately driving revenue growth.

Bonus Tip: Collecting Data from Engaged Users

Users unfamiliar with your product may provide unreliable signup information. In contrast, those who have invested time in using the product are more likely to share accurate data. Instead of requesting detailed information at initial signup, consider asking for it after users have engaged with the product for a while.

Video: "Product Metrics for Product Designers - The Ultimate Guide". This video offers insights into critical metrics that product designers should focus on to measure the success of their features and overall product performance.

Section 1.6 Expanding Feedback Sources

The user base of a product evolves over time, and it’s vital to gather feedback from a diverse audience, not just core users. Understanding how different demographics interact with the product can highlight potential gaps. For instance, during significant global events like COVID-19, many users shifted their focus toward new product categories such as time management and home entertainment tools.

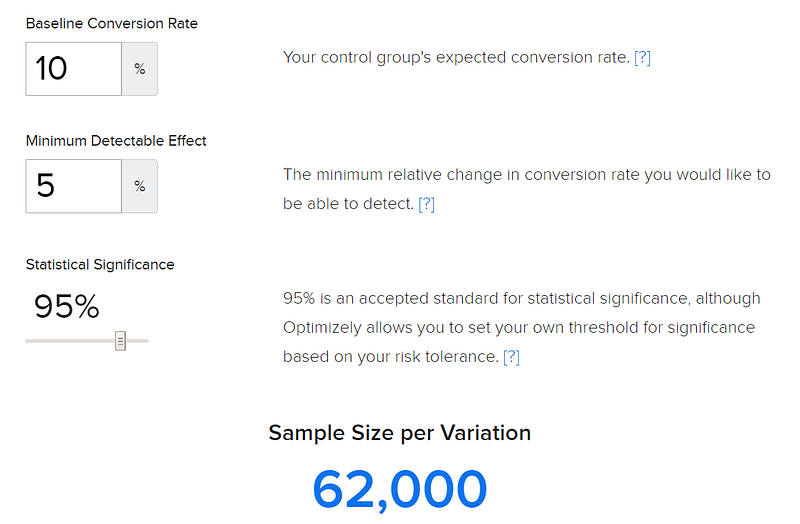

Section 1.7 Prioritizing Impactful Hypotheses

While it may be tempting to run numerous tests, it’s crucial to prioritize those with the potential for a significant impact. If your testing resources are limited, focus on hypotheses that could yield a detectable change in key performance indicators (KPIs). A sample size calculator can tell you the minimum detectable effect for the traffic you have, or how many users you need in order to detect the effect you expect.
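To make the sample size idea concrete, here is a rough sketch of the standard normal-approximation formula for comparing two conversion rates. The function name, the 10% baseline, and the default significance level and power are assumptions chosen for illustration, not values from any particular calculator.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline, mde, alpha=0.05, power=0.8):
    """Users needed per group to detect an absolute lift of `mde`.

    baseline: current conversion rate, e.g. 0.10 for 10%.
    mde: minimum detectable effect as an absolute difference, e.g. 0.02.
    """
    p1, p2 = baseline, baseline + mde
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_power = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / mde ** 2)


for lift in (0.01, 0.02, 0.05):
    print(f"lift {lift:.0%}: {sample_size_per_group(0.10, lift)} users per group")
```

Under these assumptions, detecting a lift from 10% to 12% takes roughly 3,800 users per group, and halving the expected lift requires several times more. Hypotheses with a small expected effect can easily need more traffic than a limited testing budget allows.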

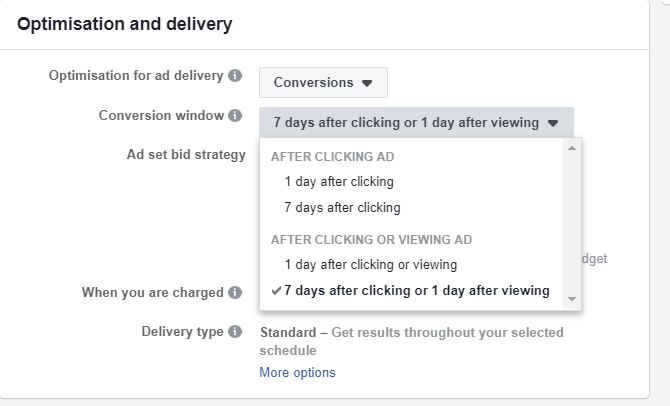

Section 1.8 Understanding Conversion Windows

When measuring the effects of new experiments on user payments, it’s important to account for the time it takes for users to make purchases. Users may not buy on their first visit; thus, establishing a conversion window can help accurately assess the impact of changes.
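The sketch below shows one way a conversion window might be applied: a user counts as converted only if the purchase happens within a fixed number of days after first seeing the experiment. The field names `exposed_on` and `purchased_on` and the sample dates are hypothetical.

```python
from datetime import date, timedelta


def converted_within_window(exposed_on, purchased_on, window_days):
    """True if the purchase happened within `window_days` of exposure."""
    if purchased_on is None:
        return False
    elapsed = purchased_on - exposed_on
    return timedelta(0) <= elapsed <= timedelta(days=window_days)


def conversion_rate(users, window_days):
    """Share of exposed users who purchased inside the window."""
    converted = sum(
        converted_within_window(u["exposed_on"], u["purchased_on"], window_days)
        for u in users
    )
    return converted / len(users)


# Hypothetical users in one experiment group.
users = [
    {"exposed_on": date(2024, 3, 1), "purchased_on": date(2024, 3, 1)},
    {"exposed_on": date(2024, 3, 1), "purchased_on": date(2024, 3, 6)},
    {"exposed_on": date(2024, 3, 2), "purchased_on": date(2024, 3, 20)},
    {"exposed_on": date(2024, 3, 2), "purchased_on": None},
]

for window in (0, 7, 30):
    print(f"{window}-day window: {conversion_rate(users, window):.0%}")
```

The same group looks very different depending on the window, so both experiment groups should be measured with the same window, and only after that window has fully elapsed for the users being counted.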

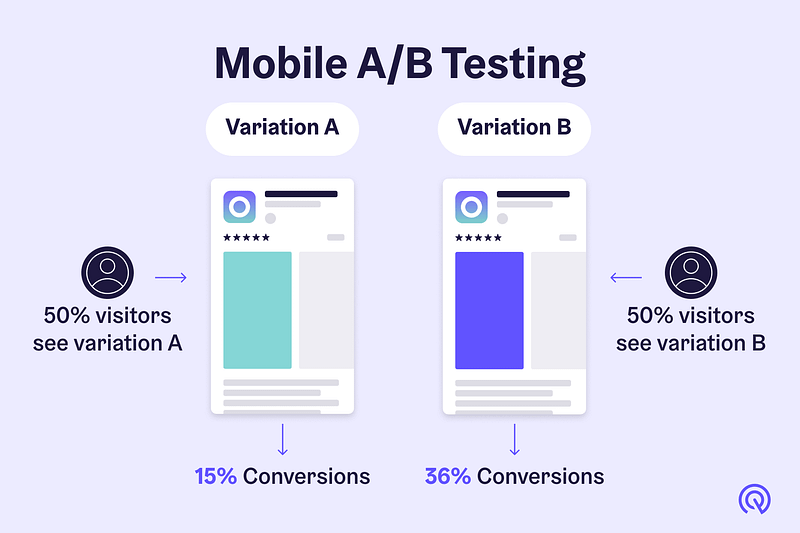

Section 1.9 Timing and Audience Segmentation

To evaluate experiment results effectively, use A/B testing instead of simply comparing metrics before and after the experiment. The mix of audience sources can change between the two periods, and because different user segments behave differently, a before-and-after comparison can mistake that shift for an effect of the experiment.
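A small worked example of why before-and-after comparisons can mislead: in the made-up numbers below, each traffic source converts at exactly the same rate in both periods, yet the blended conversion rate drops because the share of paid traffic grew. The source names and figures are purely illustrative.

```python
def blended_rate(traffic):
    """Overall conversion rate for a mix of {source: (users, conversion_rate)}."""
    users = sum(n for n, _ in traffic.values())
    conversions = sum(n * rate for n, rate in traffic.values())
    return conversions / users


# Hypothetical traffic: per-source conversion rates are identical in both periods.
before = {"organic": (8000, 0.10), "paid_ads": (2000, 0.02)}
after = {"organic": (5000, 0.10), "paid_ads": (5000, 0.02)}

print(f"before: {blended_rate(before):.1%}")
print(f"after:  {blended_rate(after):.1%}")
```

Nothing about user behavior changed here, only the audience mix. An A/B test avoids this trap because both groups draw from the same traffic during the same period.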

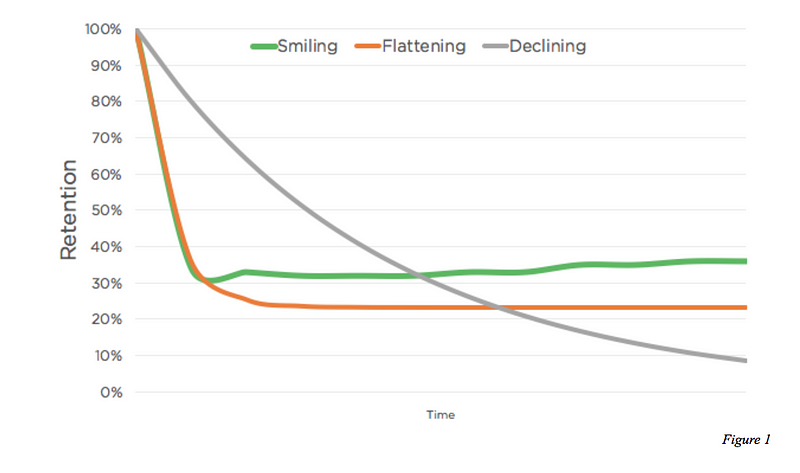

Section 1.10 Feature Retention Analysis

Analyzing the retention rates of specific features can provide valuable insights into user preferences. If one feature is utilized by 30% of users on day seven while another is only used by 10%, it suggests differing levels of utility. Ensure that features are equally discoverable within the product for a fair comparison.
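As an illustration, feature usage on a given day could be computed from an event log along these lines. The event format (user ID, feature name, days since signup), the feature names, and the cohort size are assumptions for this sketch.

```python
def feature_usage_on_day(events, cohort_size, day):
    """Share of the cohort that used each feature on the given day.

    events: iterable of (user_id, feature, days_since_signup) tuples.
    """
    users_by_feature = {}
    for user_id, feature, days_since_signup in events:
        if days_since_signup == day:
            # A set counts each user once, however many times they used the feature.
            users_by_feature.setdefault(feature, set()).add(user_id)
    return {
        feature: len(users) / cohort_size
        for feature, users in users_by_feature.items()
    }


# Hypothetical event log for a cohort of 10 users.
events = [
    (1, "search", 7), (2, "search", 7), (3, "search", 7),
    (1, "search", 7),  # repeat use by the same user
    (4, "export", 7),
    (5, "export", 3),  # not on day seven, so it is ignored
]

usage = feature_usage_on_day(events, cohort_size=10, day=7)
print(usage)
```

Counting distinct users rather than raw events keeps a handful of heavy users from inflating a feature's apparent retention.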

Video: "How Design System Leaders Measure and Boost Adoption". This video discusses strategies for leaders in design systems to effectively measure and enhance feature adoption within their codebases.

Stay connected! Feel free to follow me on Dribbble and reach out via LinkedIn. Cheers!