Exploring Natural Language Processing: A Comprehensive Guide

Chapter 1: Introduction to Natural Language Processing

Natural Language Processing (NLP) is arguably one of the most prominent domains within data science. Over the last ten years, it has gained significant attention in both corporate settings and academic circles. However, it's worth noting that NLP is not a novel discipline. The aspiration for computers to grasp and interpret human languages has existed since the inception of computing technology—back in the days when computers struggled to perform basic tasks.

The "natural language" in NLP refers to any human language, such as English, Arabic, or Spanish. The challenge lies in the intricate nature of these languages. Variations in pronunciation, regional accents, and informal speech patterns all make them harder to process, and the rapid emergence of new slang means the target keeps moving.

This article aims to illuminate the origins of NLP and explore its various subfields. So, how did NLP come to be?

Section 1.1: The Interdisciplinary Nature of NLP

NLP is a field that merges computer science with linguistics. To illustrate this, let's focus on the English language, which offers a seemingly limitless array of sentence structures. While humans can easily discern between grammatically correct and incorrect sentences, computers struggle with this distinction. It is impractical to provide a computer with a comprehensive dictionary containing every conceivable sentence in every language.

So, what is the solution?

In the early days of NLP, researchers suggested breaking down sentences into individual words for easier processing. This method mirrors the way humans learn languages, whether as children or as adults acquiring a new language.
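The word-splitting step described above is usually called tokenization. The snippet below is a minimal sketch of it, assuming a simple regular expression is good enough for plain English text; the function name `tokenize` is illustrative and not taken from any particular library.

```python
import re

def tokenize(sentence: str) -> list[str]:
    """Split a sentence into lowercase word tokens and punctuation marks."""
    # \w+(?:'\w+)? matches a word, optionally with an internal apostrophe ("don't").
    # [^\w\s] matches any single punctuation character.
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", sentence.lower())

print(tokenize("She ate the apple."))
# ['she', 'ate', 'the', 'apple', '.']
```

Real tokenizers handle many more cases (hyphenation, numbers, languages without spaces between words), so this should be read as an illustration of the idea rather than a production approach.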

Typically, we learn the fundamental components of a language first, such as the nine parts of speech in English: nouns, verbs, adjectives, adverbs, pronouns, articles, etc. These elements help clarify the role of each word within a sentence. However, a word on its own does not always reveal its category or its meaning. For instance, "leaves" can be the plural of the noun "leaf" or a form of the verb "to leave," meaning "to depart."

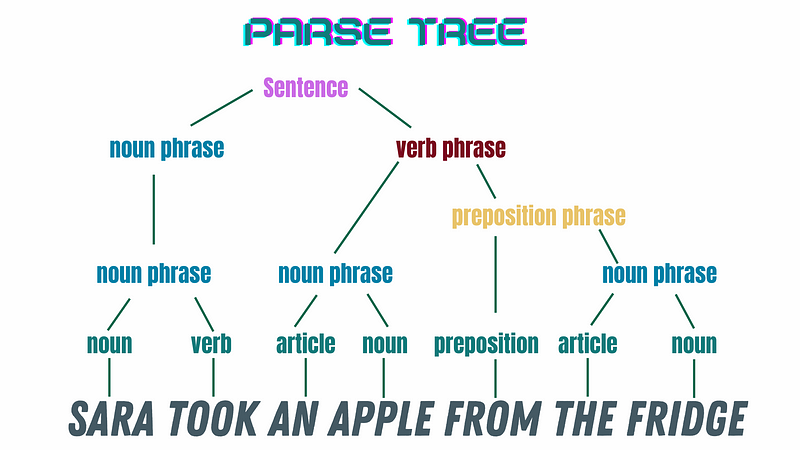

Thus, it became imperative for computers to understand basic grammar rules to alleviate confusion regarding ambiguous words. This necessity led to the establishment of phrase structure rules.

Subsection 1.1.1: Understanding Phrase Structure Rules

In essence, these rules consist of grammatical principles that dictate how sentences are constructed. For example, in English, a sentence can be formed by combining a noun phrase with a verb phrase, as in "She ate the apple." Here, "she" functions as the noun phrase, while "ate the apple" represents the verb phrase. By applying such structure rules, we can build parse trees that assign a category to each word within a sentence, which in turn helps recover the sentence's overall meaning.
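To make the idea concrete, here is a minimal sketch of a parser driven by a handful of phrase structure rules. The grammar and lexicon are toy assumptions made up for this example (a sentence `S` is a noun phrase `NP` followed by a verb phrase `VP`), and the function names are hypothetical. It is a simple backtracking parser, not how any particular historical system worked.

```python
# Toy phrase structure rules: each symbol maps to its possible expansions.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Pronoun"], ["Det", "Noun"]],
    "VP": [["Verb", "NP"], ["Verb"]],
}

# Toy lexicon: "leaves" is deliberately ambiguous between noun and verb.
LEXICON = {
    "she": {"Pronoun"},
    "the": {"Det"},
    "apple": {"Noun"},
    "ate": {"Verb"},
    "fall": {"Verb"},
    "leaves": {"Noun", "Verb"},
}

def parse(symbol, words, start):
    """Yield (tree, next_position) for every way symbol can match words[start:]."""
    if symbol not in GRAMMAR:  # a word category: try to consume one word
        if start < len(words) and symbol in LEXICON.get(words[start], set()):
            yield (symbol, words[start]), start + 1
        return
    for rule in GRAMMAR[symbol]:
        yield from expand(symbol, rule, words, start, [])

def expand(symbol, rule, words, pos, children):
    """Match the remaining symbols of one rule left to right, with backtracking."""
    if not rule:
        yield (symbol, *children), pos
        return
    for child, next_pos in parse(rule[0], words, pos):
        yield from expand(symbol, rule[1:], words, next_pos, children + [child])

def parse_sentence(sentence):
    """Return the first parse tree covering the whole sentence, or None."""
    words = sentence.lower().rstrip(".").split()
    for tree, end in parse("S", words, 0):
        if end == len(words):
            return tree
    return None

print(parse_sentence("She ate the apple."))
print(parse_sentence("The leaves fall."))  # "leaves" is parsed as a noun
print(parse_sentence("She leaves."))       # "leaves" is parsed as a verb
```

The last two calls show how the rules settle the ambiguity: the same word receives a different category depending on which position in the tree it can legally fill.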

Chapter 2: Challenges of Natural Language Complexity

While this methodology works well for straightforward sentences, complexities arise with longer or more convoluted statements. In such cases, computers can struggle to comprehend the intended meaning.

Section 2.1: Subfields of NLP

Text Processing

Chatbots serve as a prime example of NLP in action. Early chatbots relied on rule-based systems, requiring scientists to write extensive lists of rules that mapped user inputs to appropriate responses. One notable example from the 1960s is ELIZA, a chatbot designed to mimic a therapist.

In contrast, contemporary chatbots and virtual assistants utilize advanced machine learning techniques, drawing on vast datasets of human conversations. The more data these models are trained on, the more proficient they become.
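The rule-based approach used by early chatbots can be sketched in a few lines. The patterns and replies below are invented for illustration and are not taken from ELIZA's actual script; `RULES`, `DEFAULT_REPLY`, and `respond` are hypothetical names.

```python
import re

# Each rule pairs a pattern with a reply template; the first match wins.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."

def respond(message: str) -> str:
    """Return the reply of the first matching rule, or a generic fallback."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the captured part of the user's message in the reply.
            return template.format(*match.groups())
    return DEFAULT_REPLY

print(respond("I feel tired today."))  # Why do you feel tired today?
print(respond("Hello"))                # Please go on.
```

Every new behavior needs another hand-written rule, which is why this style scales poorly compared with models trained on conversation data.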

Speech Recognition

While chatbots work with written language, how do computers interpret spoken words? This leads us to the second subfield of NLP: speech recognition. This technology is not new and has attracted research attention for decades. One of the earliest successful speech recognition systems, Harpy, was developed at Carnegie Mellon University in the 1970s and could recognize roughly 1,000 words.

Initially, the limitations of computer processing power made real-time speech recognition nearly impossible—unless the speaker talked exceedingly slowly. However, advancements in computing technology have since overcome these challenges.

Speech Synthesis

Conversely, speech synthesis involves enabling computers to produce vocal sounds. In both speech recognition and speech synthesis, sentences are segmented into phonetic units known as phonemes. These units can be stored, rearranged, and reproduced by computers to articulate specific phrases.

One of the earliest electronic speech synthesis machines, the Voder, was introduced in 1937 by Bell Labs and was operated manually. Over time, the methods for collecting and assembling phonetic units have evolved significantly. Although modern algorithms have improved the quality of synthesized speech, it often still retains a robotic quality. Recent virtual assistants like Siri, Cortana, and Alexa showcase the progress made in this area, yet they still fall short of sounding entirely human.

Takeaways

Natural language processing stands as one of the most vital fields in data science, enabling computers to understand and generate human languages. It encompasses a range of subfields, including text processing, speech recognition, and speech synthesis. These categories frequently rely on machine learning models, particularly neural networks, and extensive datasets of human interactions. However, variations in languages and accents can affect accuracy, highlighting the need for tailored language models to enhance performance.

This article marks the beginning of a series exploring various facets of NLP, from its history and fundamental categories to practical applications and the current state of research. Stay tuned for more insights!
